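This article covers encoding and decoding H.264 with VideoToolbox. I wrote it while studying WWDC 2014 session 513, Direct Access to Video Encoding and Decoding.

When I first watched the session I found it hard to follow, and I read a lot of blog posts and articles before the concepts finally fell into place. If you are just starting out with VideoToolbox, I suggest reading this article alongside the video. Note that the article does not follow the order of the video: Apple's presentation is not very beginner-friendly, so I reorganized the material into the following parts.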

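- Common
  - Pitfalls of calling C from Swift.
  - Explanations of some basic terms.
- Decompress && Compress
  - Decompress
    - How to decode a compressed CMSampleBuffer into a CVPixelBuffer.
    - Decoding parameters and things to watch out for.
  - Compress
    - How to encode an uncompressed CMSampleBuffer into a compressed CMSampleBuffer.
    - Encoding parameters and things to watch out for.
- Advanced
  - Decompress && Compress
    - Decompress
      - How to wrap a raw H.264 data stream into compressed CMSampleBuffers.
    - Compress
      - How to encode an uncompressed CMSampleBuffer into an H.264 data stream.
- Summary
  - A summary of hardware encoding and decoding with VideoToolbox.
- Reference
  - Reference articles and blog posts.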

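Encoding and decoding with VideoToolbox is actually simple. The hard part is deciding what to do with the data afterwards: display it, write it to a file, or send it over the network? If you only need to display captured data or write it to a file, the AVFoundation APIs can do that directly and easily. For network transmission or live streaming, you need VideoToolbox.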

Common

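This part covers the harder general problems and the terminology I ran into while learning.

Pointer conversion in Swift

VideoToolbox is a C API, so many calls require passing in a pointer to self and then recovering the instance inside the callback. This is straightforward in Objective-C but more cumbersome in Swift.

/// Convert self to a pointer

/// Convert self to UnsafeMutableRawPointer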
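One common way to do this round trip is with `Unmanaged` from the Swift standard library. The following is only a minimal sketch; the class name `FrameDecoder` and its two helper methods are hypothetical and chosen for illustration.

```swift
/// Hypothetical class standing in for whatever object owns the VideoToolbox session.
final class FrameDecoder {
    var decodedFrameCount = 0

    /// Convert self to an UnsafeMutableRawPointer suitable for a C refCon argument.
    /// passUnretained does not change the retain count, so the object must
    /// outlive every callback that receives this pointer.
    func toRefCon() -> UnsafeMutableRawPointer {
        return Unmanaged.passUnretained(self).toOpaque()
    }

    /// Recover the instance inside a C callback from the refCon pointer.
    static func fromRefCon(_ refCon: UnsafeRawPointer) -> FrameDecoder {
        return Unmanaged<FrameDecoder>.fromOpaque(refCon).takeUnretainedValue()
    }
}

let decoder = FrameDecoder()
let refCon = decoder.toRefCon()
// Inside a C callback only the raw pointer is available:
FrameDecoder.fromRefCon(refCon).decodedFrameCount += 1
```

Using the unretained variants keeps ownership with the Swift side, which is usually what you want when the object itself creates and invalidates the session.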

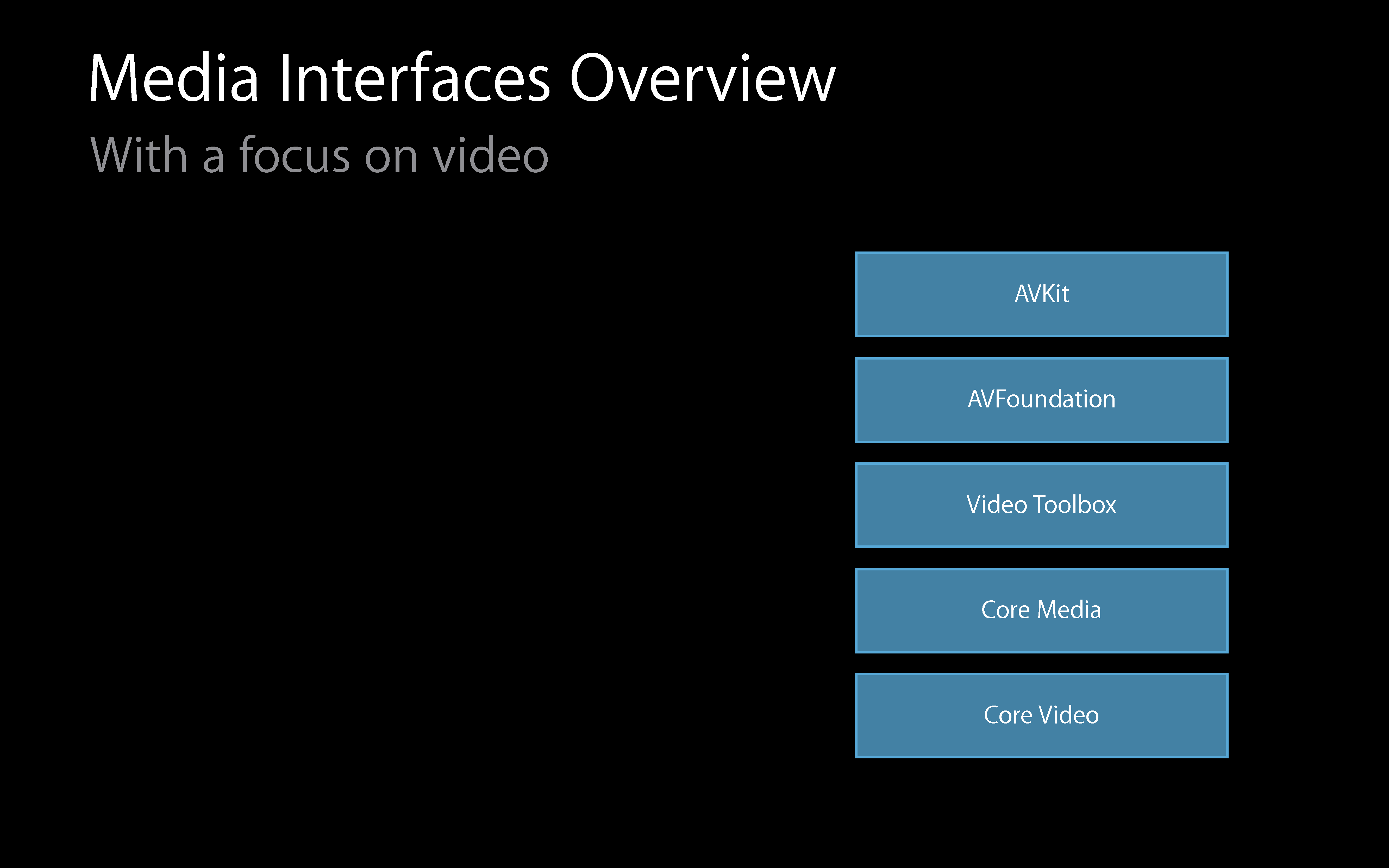

Technology

Core Video

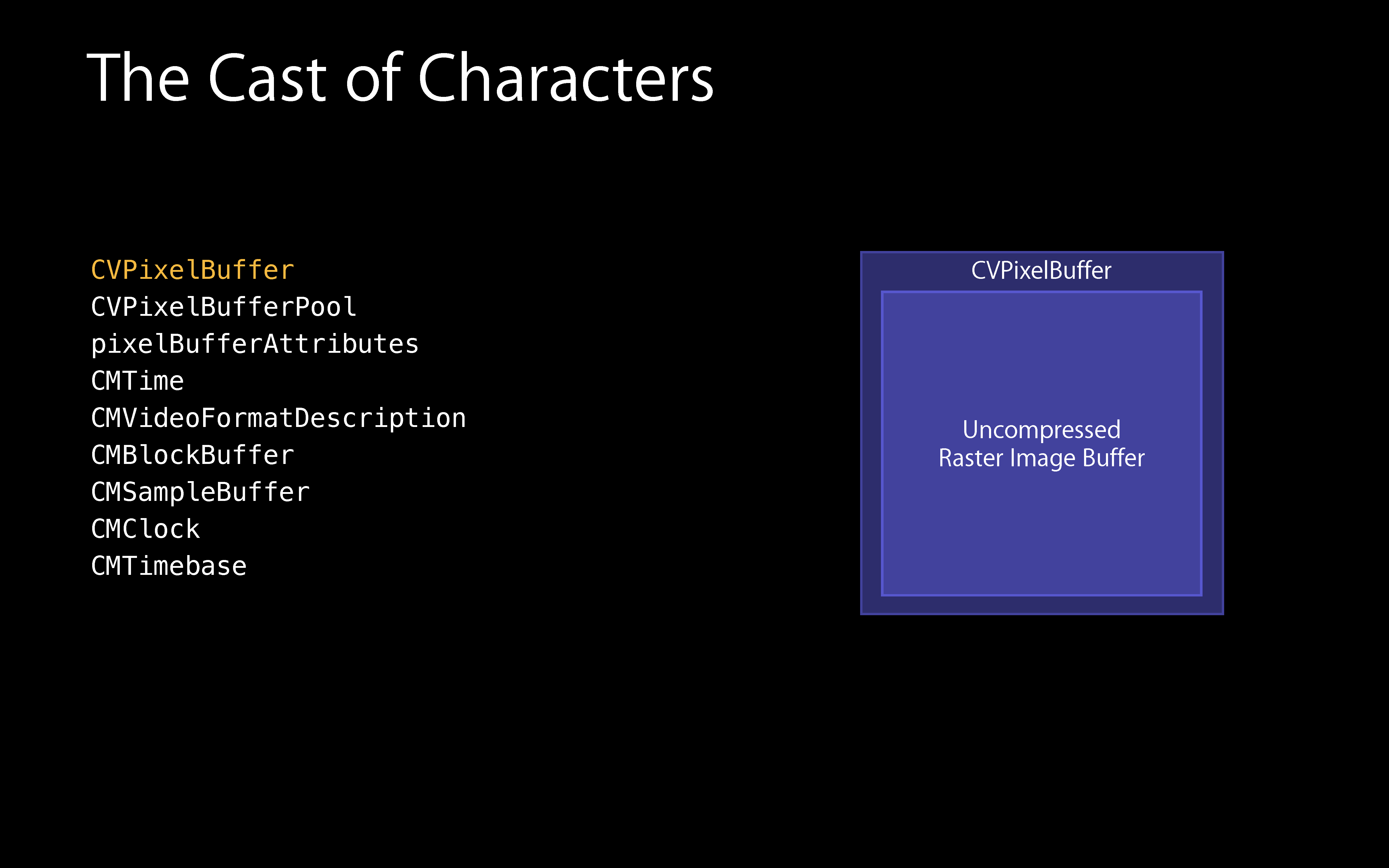

CVPixelBuffer

A reference to a Core Video pixel buffer object. The pixel buffer stores an image in main memory.

typealias CVPixelBuffer = CVImageBuffer

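A CVPixelBuffer is an uncompressed raster image buffer held in main memory. As I understand it, it is simply one uncompressed picture, that is, one frame of video.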

Core Media

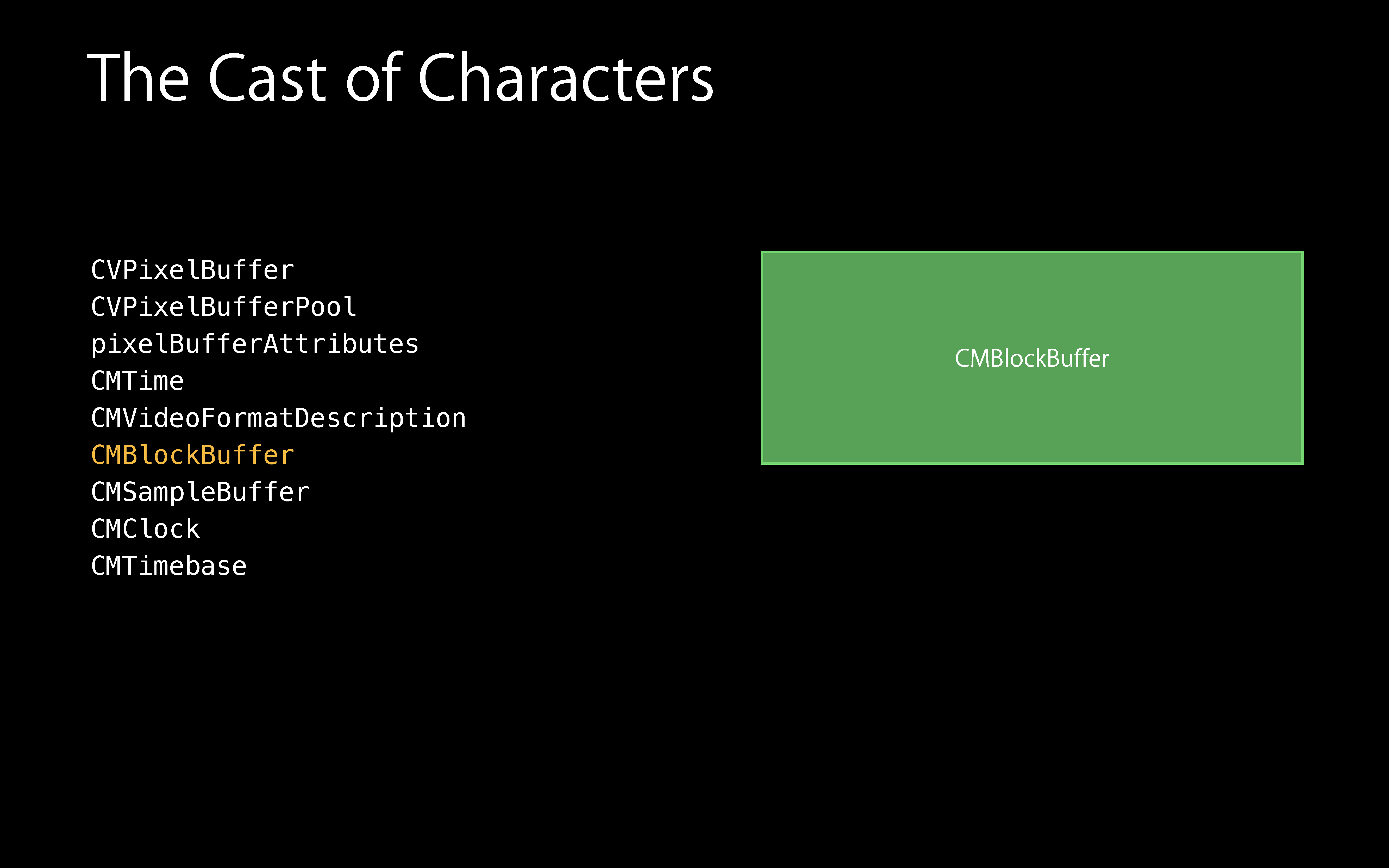

CMBlockBuffer

CMBlockBuffer is the basic way that we wrap arbitrary blocks of data in core media.

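A CMBlockBuffer is a buffer of arbitrary data, the "Any" of buffers. When video is compressed in the pipeline, the result is wrapped in a CMBlockBuffer, so in practice a CMBlockBuffer represents compressed data.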

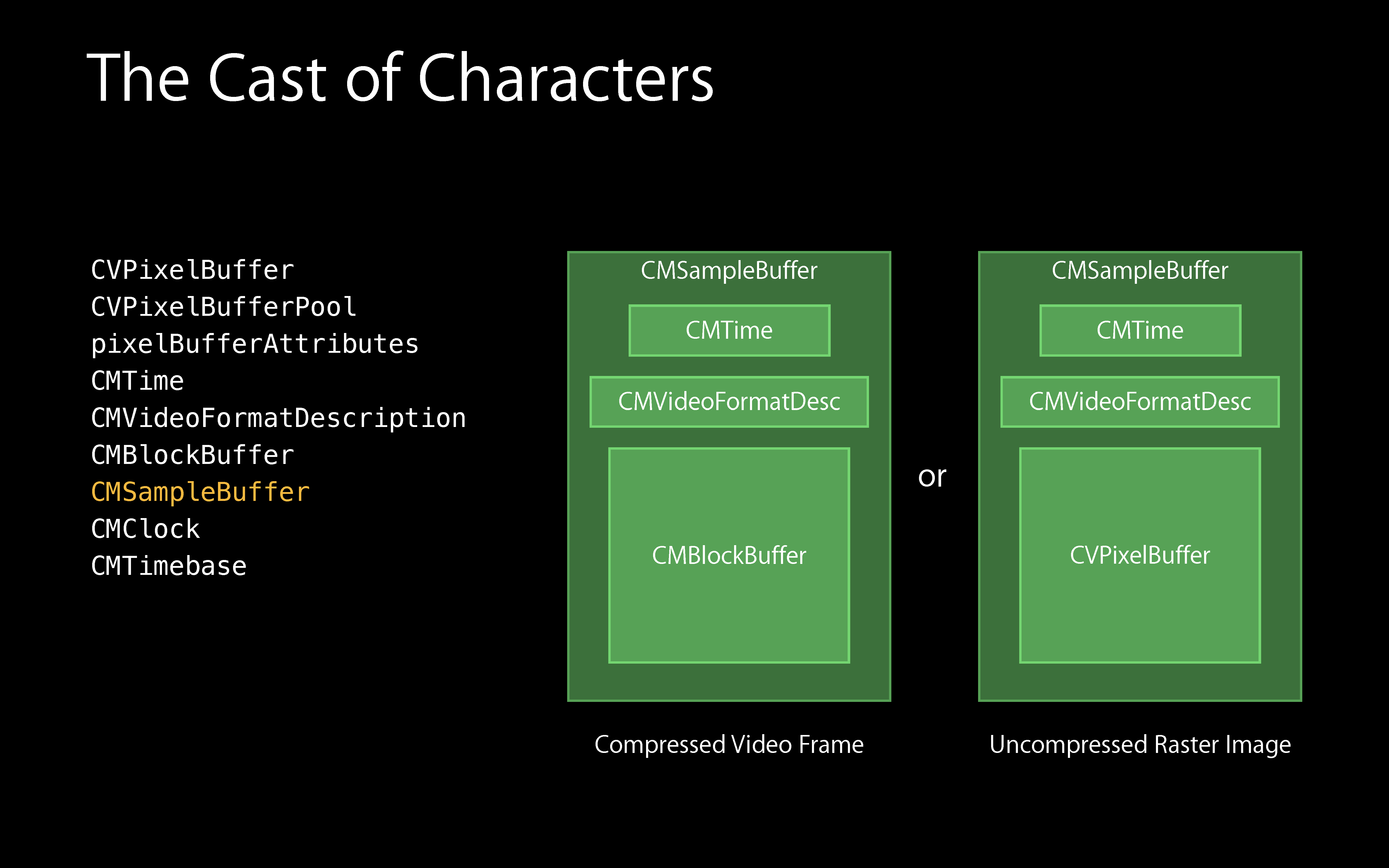

CMSampleBuffer

A CMSampleBuffer is a Core Foundation object containing zero or more compressed (or uncompressed) samples of a particular media type (audio, video, muxed, and so on).

In other words, a CMSampleBuffer may hold compressed or uncompressed data, depending on whether it wraps a CMBlockBuffer or a CVPixelBuffer.

H.264 && H.265

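These are two coding standards; you can think of them as encoding protocols.

H.264

The H.264/AVC project set out to create a video standard that delivers good video quality at substantially lower bit rates than earlier standards (that is, half or less of the bit rate of MPEG-2, H.263, or MPEG-4 Part 2), without increasing design complexity so much that it becomes impractical or too expensive to implement. Another goal was to provide enough flexibility for use across a wide range of applications, networks, and systems, including high and low bit rates, high and low video resolutions, broadcast, DVD storage, RTP/IP networks, and ITU-T multimedia telephony systems.

H.264/AVC introduced a number of new features that let it compress more efficiently than earlier codecs and work across a variety of network environments. This technical foundation is why online video companies, including YouTube, adopted H.264 as their primary codec. Using it is not entirely painless, though: in principle, using H.264 requires paying substantial patent licensing fees.

H.265

High Efficiency Video Coding (HEVC) is a video compression standard regarded as the successor to ITU-T H.264/MPEG-4 AVC. Work on it began in 2004 under the ISO/IEC Moving Picture Experts Group (MPEG) and the ITU-T Video Coding Experts Group (VCEG), as ISO/IEC 23008-2 MPEG-H Part 2, also known as ITU-T H.265. The first version of the HEVC/H.265 standard was approved as an official ITU-T standard on April 13, 2013. HEVC is considered to improve video quality while also reaching twice the compression ratio of H.264/MPEG-4 AVC (equivalent to a 50% bit rate reduction at the same picture quality). It supports 4K resolution and ultra-high-definition television (UHDTV), with a maximum resolution of 8192×4320 (8K).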

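Video Format

A video format acts as a container: it stores the encoded data according to a defined layout.

| Format | Detail |
|---|---|
| AVI | Extension .avi. Audio Video Interleave, introduced by Microsoft in 1992. Its strength is good image quality, and because lossless AVI can carry an alpha channel it is still used often. It has many drawbacks: files are very large and, worse, the compression is not standardized. Newer versions of Windows Media Player often cannot play AVI files made with early codecs, and older versions cannot play AVI files made with the latest codecs. As a result, AVI files frequently fail to play because of codec problems, or play with odd issues such as no seeking or audio without video. |
| QuickTime | Extension .mov. A video format developed by Apple; the default player is Apple's QuickTime. It offers a high compression ratio and very good clarity, and can carry an alpha channel. |
| MPEG | Extensions include .mpg, .mpeg, .mpe, .dat, .vob, .3gp, and .mp4. Named after the Moving Picture Experts Group, which was formed in 1988 to create video and audio standards for CD and whose members are experts in video, audio, and systems. The MPEG formats are international standards for moving-picture compression. There are currently three MPEG compression standards: MPEG-1, MPEG-2, and MPEG-4. MPEG-1 and MPEG-2 are now used less, so the focus here is MPEG-4. Defined in 1998, MPEG-4 was designed for high-quality streaming video, aiming for the best image quality with the least data. Its main attraction is that it can produce small files with near-DVD quality. |
| WMV | Extensions .wmv and .asf. Windows Media Video, also from Microsoft: a compressed file format with its own codec that can be watched in real time over the network. Its main advantages include local or network playback, rich stream relationships, and extensibility. Playing WMV on a website requires Windows Media Player (WMP) to be installed, which is inconvenient, and almost no websites use it anymore. |
| Real Video | Extensions .rm and .rmvb. The audio and video compression specification defined by RealNetworks is called Real Media. With RealPlayer, different compression ratios can be chosen for different network speeds, which allows real-time transmission and playback of video over slow networks. RMVB is a newer format that extends RM with much better performance. Its advantage is clear: a DVD movie of about 700 MB transcoded to RMVB at the same quality takes at most about 400 MB. RMVB used to be very common for movies and videos downloaded from the internet, but over time it has been replaced by better formats; the well-known subtitle group YYeTs (人人影视) announced in 2013 that it would stop releasing RMVB videos. |
| Flash Video | Extension .flv. A popular network video container format derived from Adobe Flash. With the growth of video websites, this format became very widespread. |
| Matroska | Extension .mkv. A newer multimedia container format that can hold video in several different encodings, 16 or more audio tracks in different formats, and subtitles in different languages in a single Matroska Media file. It is also an open-source container format. Matroska additionally offers very good interactive features and is more convenient and powerful than MPEG. |
| MPEG2-TS | Extension .ts. Transport Stream (also called MTS or TS) is a standard format for transmitting and storing audio, video, and protocol data, used in digital television broadcast systems such as DVB, ATSC, and IPTV. MPEG2-TS is defined in MPEG-2 Part 1, Systems (ISO/IEC 13818-1, also ITU-T Rec. H.222.0). Players such as Media Player Classic and VLC can play MPEG-TS files directly. |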

FPS (Frames Per Second)

The number of frames displayed per second. The higher the frame rate, the smoother the motion.

Resolution

For example, the camera preset AVCaptureSessionPreset640x480.

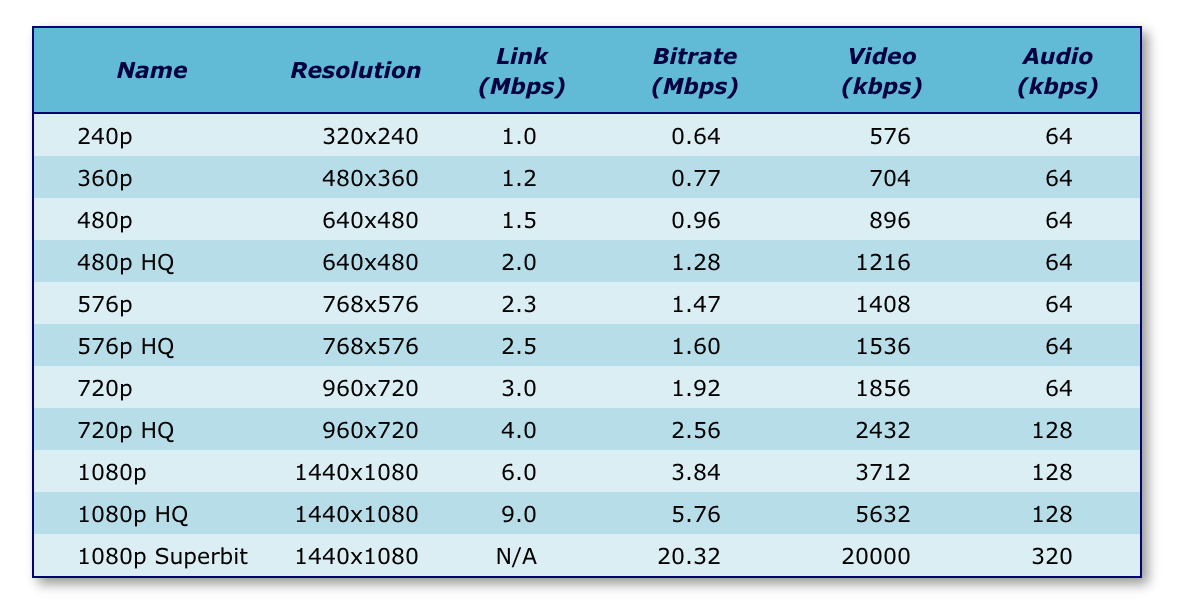

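Bit Rate / Data Rate

Bit rate (also called data rate) is the number of bits needed per second to represent the encoded (compressed) audio or video data. A bit is the smallest unit in binary, either 0 or 1. Put simply, the higher the bit rate, the better the audio and video quality, but the larger the encoded file; a lower bit rate has the opposite effect.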
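The link between bit rate and file size can be made concrete with a little arithmetic. This is only a rough sketch: the function name is made up for illustration, and it assumes a constant bit rate and ignores container overhead and any audio track.

```swift
/// Approximate size in bytes of a stream encoded at a constant bit rate.
func estimatedSizeInBytes(bitsPerSecond: Int, durationInSeconds: Int) -> Int {
    return bitsPerSecond * durationInSeconds / 8  // 8 bits per byte
}

// One minute of video at 1 Mbit/s versus 4 Mbit/s.
let lowRateSize = estimatedSizeInBytes(bitsPerSecond: 1_000_000, durationInSeconds: 60)
let highRateSize = estimatedSizeInBytes(bitsPerSecond: 4_000_000, durationInSeconds: 60)
print(lowRateSize, highRateSize)  // 7500000 30000000
```

Quadrupling the bit rate quadruples the size, which is why the bit rate is usually the first parameter to tune for network transmission.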

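The different bit rate modes are listed below.

| Abbreviation | Full name | Detail |
|---|---|---|
| CBR | Constant Bit Rate | The bit rate stays fixed |
| VBR | Variable Bit Rate | The bit rate varies with the content |
| MBR | Multiple Bit Rate | The content is encoded at several bit rates |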

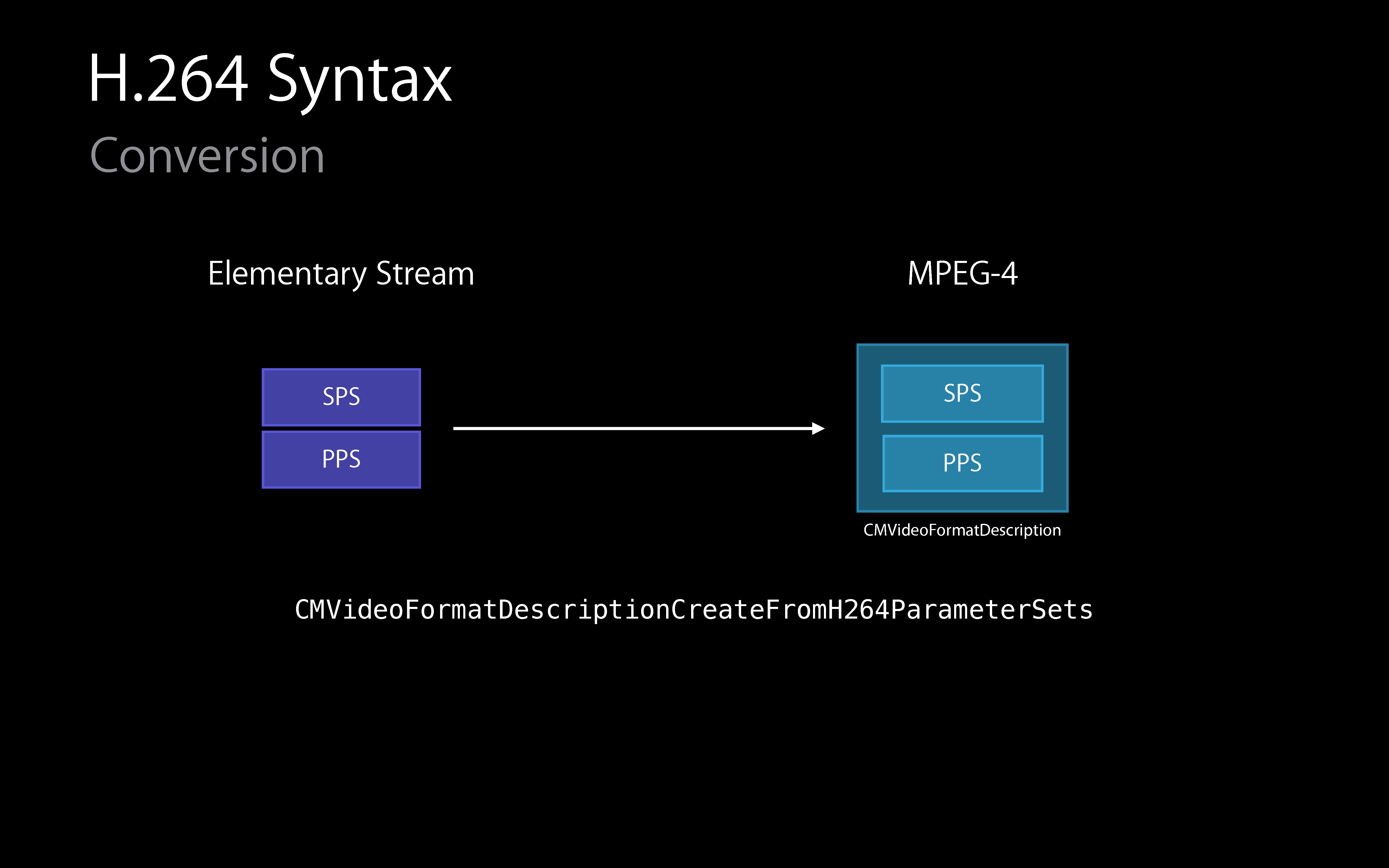

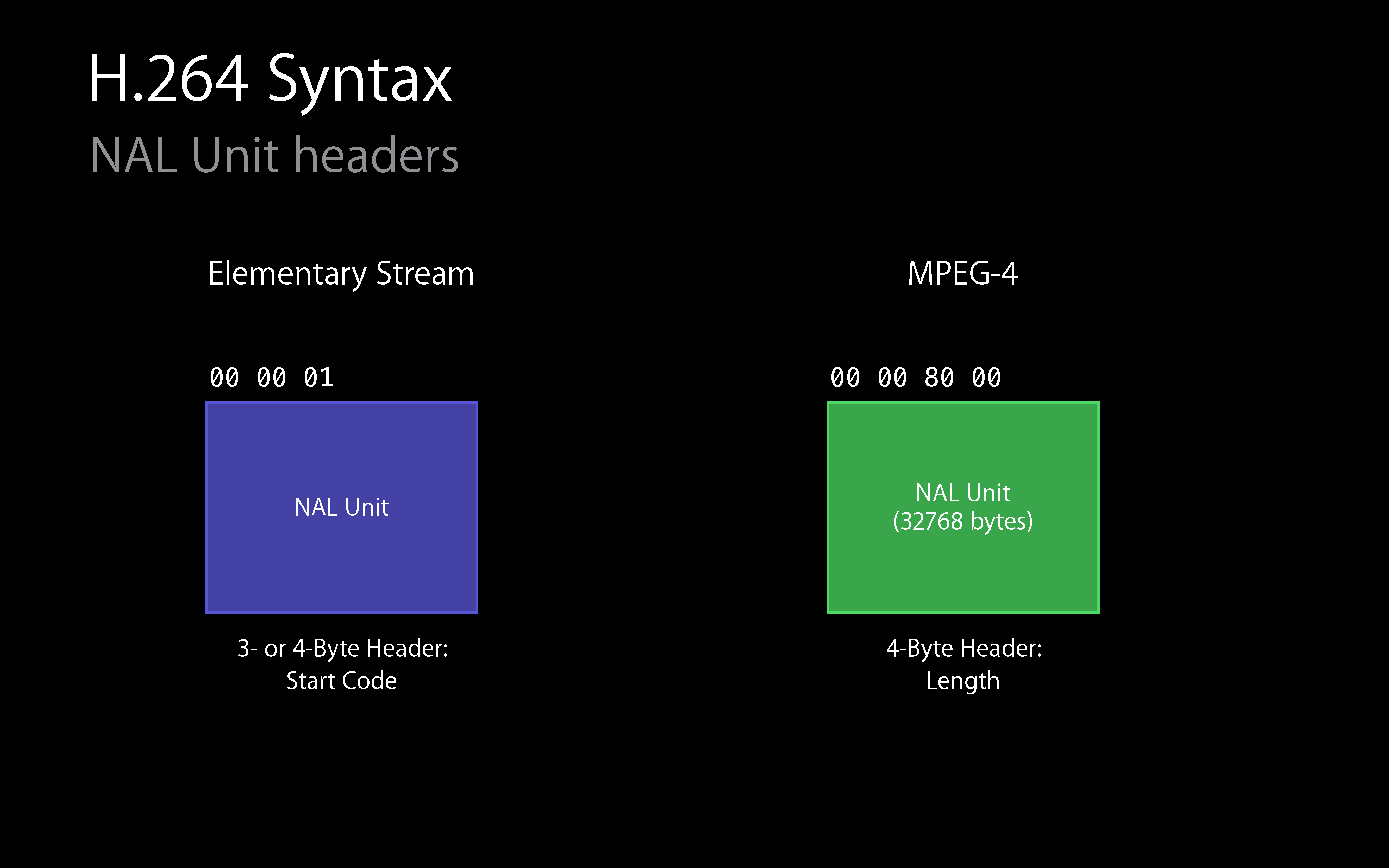

NALU

H.264 stream consists of a sequence of NAL Units (NALUs)

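An H.264 bitstream consists of a sequence of NAL units. As I understand it, a NALU is the smallest unit of data in compression and transmission.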
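Each H.264 NAL unit begins with a one-byte header whose low five bits give the unit type. The sketch below decodes that byte; the struct name `NALUnitHeader` is hypothetical, while the bit layout and the type values in the comments come from the H.264 specification.

```swift
/// Fields of the one-byte H.264 NAL unit header.
struct NALUnitHeader {
    let forbiddenZeroBit: UInt8  // must be 0 in a valid stream
    let refIdc: UInt8            // 0 means no other picture references this unit
    let type: UInt8              // 1 = non-IDR slice, 5 = IDR slice, 7 = SPS, 8 = PPS

    init(byte: UInt8) {
        forbiddenZeroBit = byte >> 7
        refIdc = (byte >> 5) & 0x03
        type = byte & 0x1F
    }
}

let spsHeader = NALUnitHeader(byte: 0x67)
let idrHeader = NALUnitHeader(byte: 0x65)
print(spsHeader.type, idrHeader.type)  // 7 5
```

Checking the type this way is how a receiver can tell parameter sets (SPS, PPS) apart from picture data before handing anything to the decoder.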

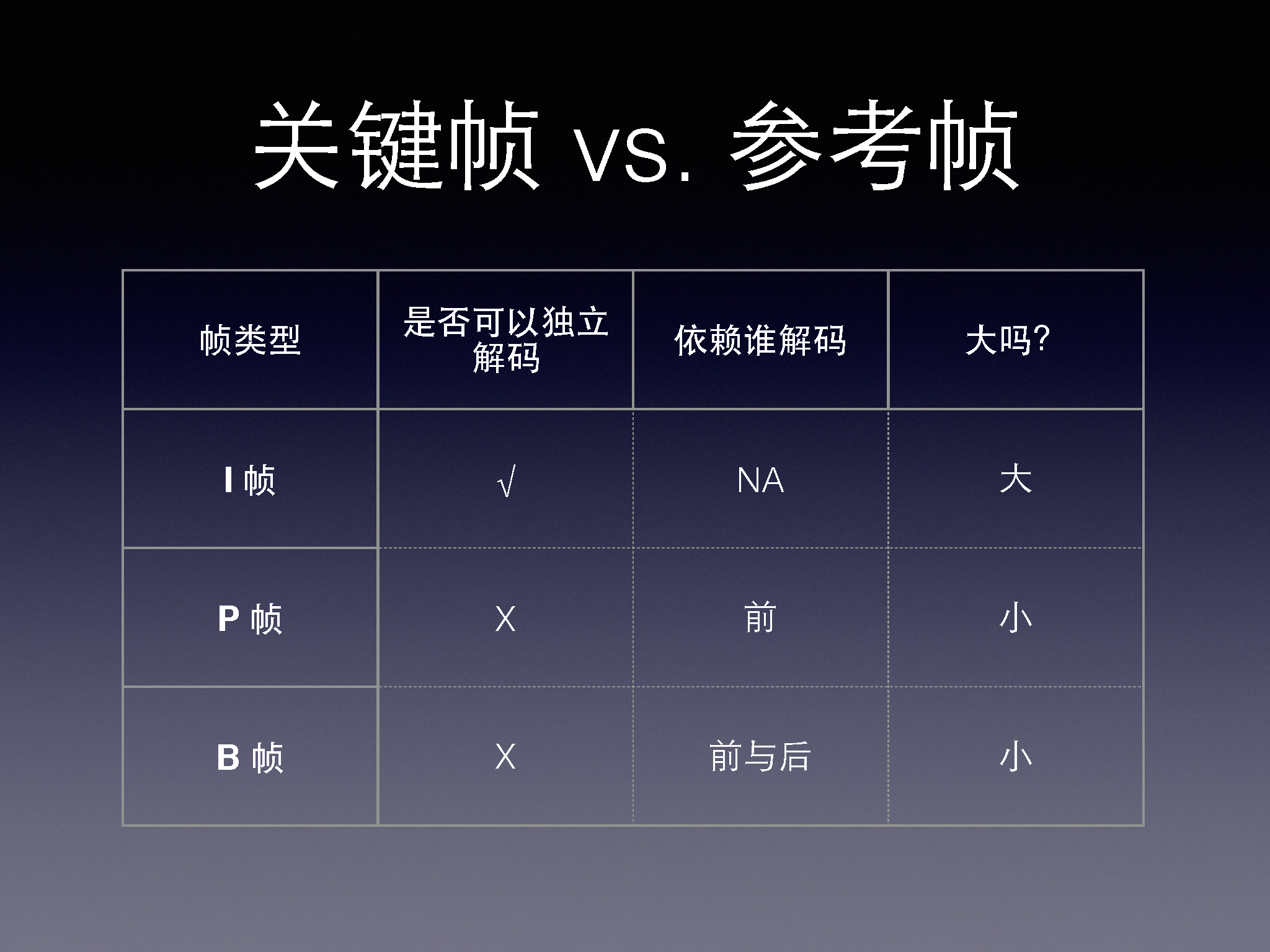

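I, B, and P Frames

| Frame | Detail |
|---|---|
| I Frame | Intra-coded frame, also called a key frame (an IDR frame is a kind of I frame) |
| B Frame | Bidirectionally predicted frame (references both earlier and later frames) |
| P Frame | Forward-predicted frame (references earlier frames) |

An I frame is a complete picture, while P and B frames record only the changes relative to their reference frames. Without the I frame, the P and B frames cannot be decoded.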

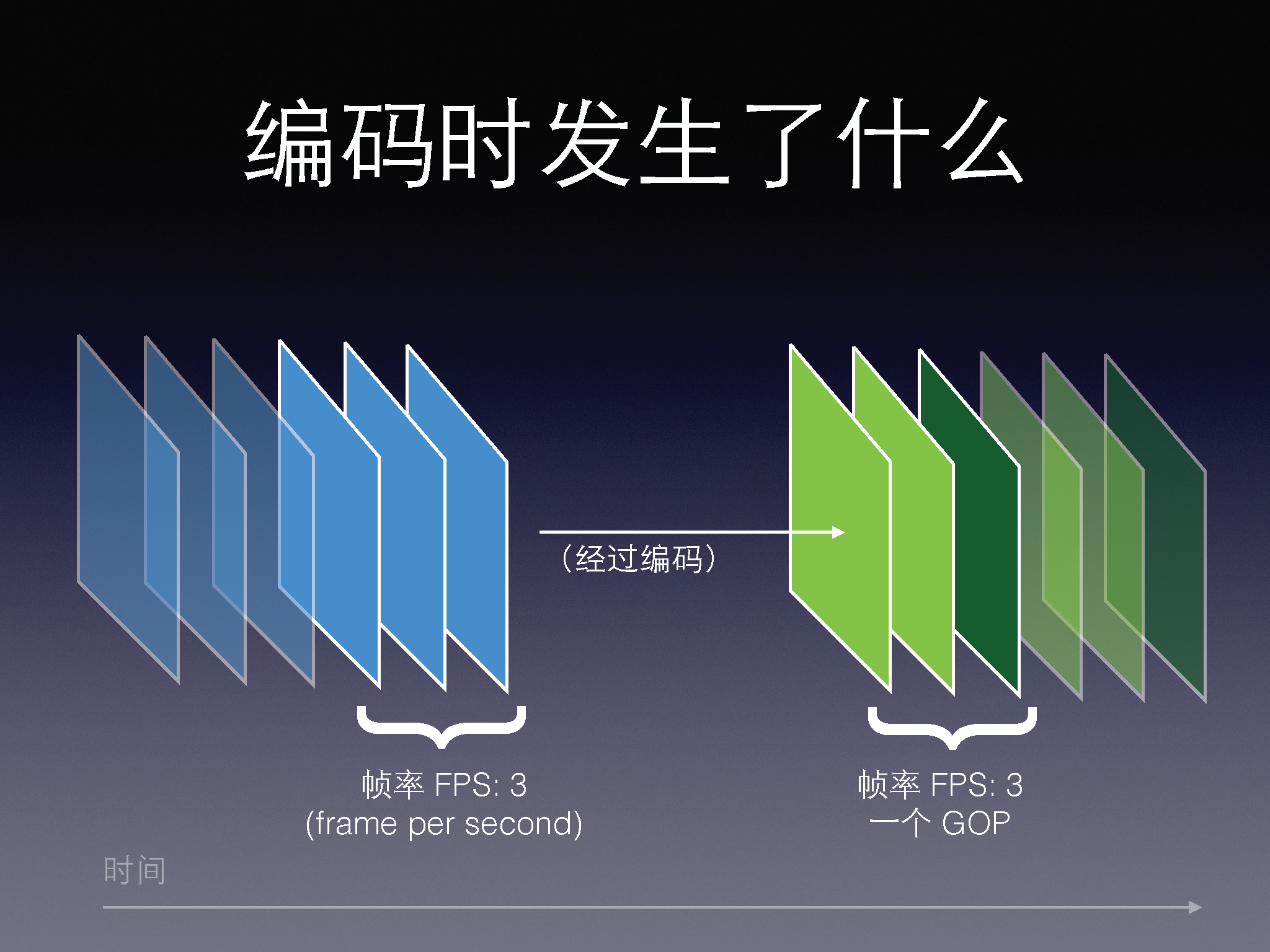

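GOP (Group of Pictures)

A GOP (Group of Pictures) is a run of consecutive pictures made up of one I frame and several B and P frames. It is the basic unit that video encoders and decoders access, and its pattern repeats until the end of the video. The GOP reflects the key frame period, that is, the distance between two key frames, or the maximum number of frames in one group. Suppose a video has a constant frame rate of 24 fps (24 pictures per second) and a key frame period of 2 s; then one GOP contains 48 pictures. In general, every second of video needs at least one key frame. Adding key frames improves picture quality (the GOP length is usually a multiple of the FPS), but it also increases bandwidth and network load.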
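The GOP arithmetic above can be written down directly. This is a small sketch with hypothetical function names, assuming a constant frame rate and a fixed key frame period.

```swift
/// Number of frames in one GOP for a constant frame rate.
func framesPerGOP(framesPerSecond: Int, keyFrameIntervalInSeconds: Int) -> Int {
    return framesPerSecond * keyFrameIntervalInSeconds
}

/// Whether the frame at `frameIndex` (0-based) starts a new GOP,
/// which means it should be encoded as a key frame.
func startsNewGOP(frameIndex: Int, gopLength: Int) -> Bool {
    return frameIndex % gopLength == 0
}

let gopLength = framesPerGOP(framesPerSecond: 24, keyFrameIntervalInSeconds: 2)
print(gopLength)                                           // 48
print(startsNewGOP(frameIndex: 48, gopLength: gopLength))  // true
print(startsNewGOP(frameIndex: 49, gopLength: gopLength))  // false
```

In VideoToolbox the corresponding encoder property, kVTCompressionPropertyKey_MaxKeyFrameInterval, is expressed in frames, so this frame count is the value you would pass.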

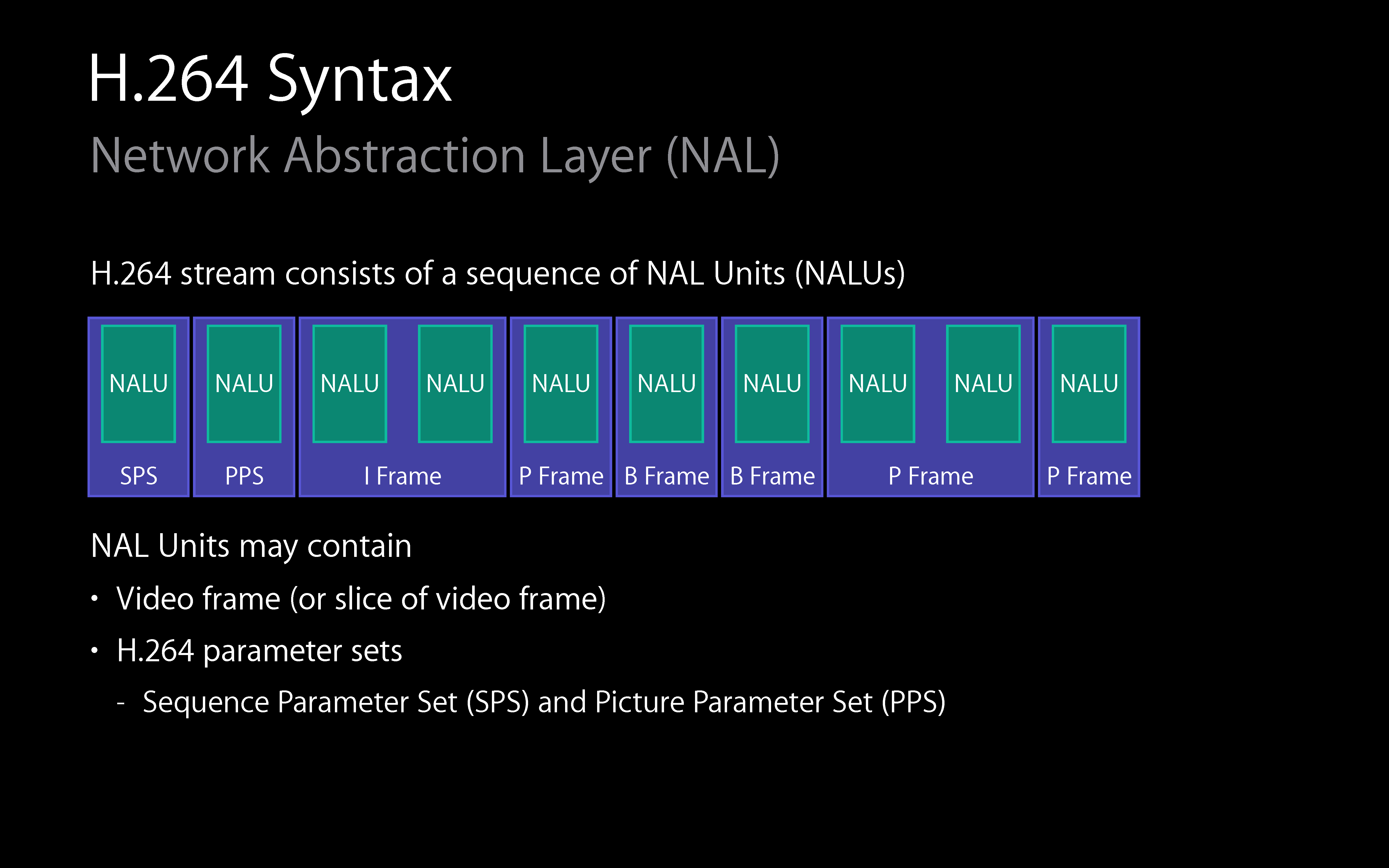

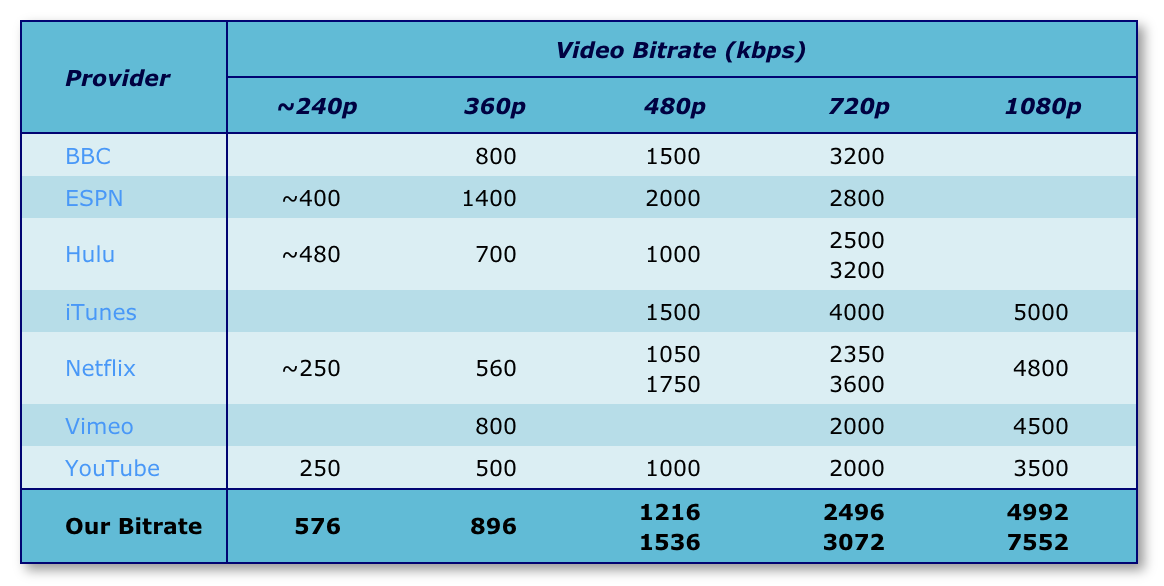

SPS && PPS

SPS (Sequence Parameter Set) and PPS (Picture Parameter Set): both entities contain information that an H.264 decoder needs to decode the video data, for example the resolution and frame rate of the video.

They carry information about the video, such as the resolution and frame rate.

Further reading: The h.264 Sequence Parameter Set; SPS & PPS in H.264; H264码流中SPS PPS详解 (a detailed explanation of SPS and PPS in H.264 bitstreams).

Summary

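The following diagram explains the relationship between NALUs and SPS, PPS, I frames, B frames, and P frames.

A NALU can carry video picture data (an I, P, or B frame, or a slice of a frame), an SPS, or a PPS. It may take many NALUs to make up one frame.

The next diagram explains the two NALU formats, Elementary Stream and MPEG-4: one is designed for transmission, the other for storage.

In other words, sequential playback versus random access.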

Decompress && Compress

This part shows how to use VideoToolbox to convert between an uncompressed CMSampleBuffer (wrapping a CVPixelBuffer) and a compressed CMSampleBuffer (wrapping a CMBlockBuffer).

Decompress

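Decoding flow:

- Create a decoding session with VTDecompressionSessionCreate(_:_:_:_:_:_:).
- Set session properties with VTSessionSetProperty(_:_:_:) or VTSessionSetProperties(_:_:).
- Decode video frames with VTDecompressionSessionDecodeFrame(_:_:_:_:_:); the decoded result is delivered to the callback held in VTDecompressionOutputCallbackRecord.
- Call VTDecompressionSessionWaitForAsynchronousFrames(_:) to wait until all pending frames have been decoded.
- When decoding is finished, call VTDecompressionSessionInvalidate(_:) to end the session and release its memory.

Note that VTDecompressionOutputCallbackRecord is a struct.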
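The steps above can be sketched as a small wrapper class. This is an illustration under assumptions rather than a complete decoder: the class `H264Decoder` and its method names are hypothetical, the callback only counts frames, and a real implementation would hand the CVPixelBuffer to a renderer and guard against the callback arriving on another thread.

```swift
import Foundation
import VideoToolbox

/// Hypothetical wrapper around a VTDecompressionSession.
final class H264Decoder {
    private var session: VTDecompressionSession?
    private(set) var decodedFrameCount = 0

    /// Create the session, or keep the current one if it accepts the new format.
    func prepare(for format: CMVideoFormatDescription) -> OSStatus {
        if let session = session {
            if VTDecompressionSessionCanAcceptFormatDescription(session, formatDescription: format) {
                return noErr
            }
            invalidate()
        }
        var record = VTDecompressionOutputCallbackRecord(
            decompressionOutputCallback: { refCon, _, status, _, imageBuffer, _, _ in
                // C callback: recover the Swift instance from the refCon pointer.
                guard status == noErr, imageBuffer != nil, let refCon = refCon else { return }
                let decoder = Unmanaged<H264Decoder>.fromOpaque(refCon).takeUnretainedValue()
                decoder.decodedFrameCount += 1
            },
            decompressionOutputRefCon: Unmanaged.passUnretained(self).toOpaque())
        let status = VTDecompressionSessionCreate(
            allocator: kCFAllocatorDefault,
            formatDescription: format,
            decoderSpecification: nil,
            imageBufferAttributes: nil,
            outputCallback: &record,
            decompressionSessionOut: &session)
        if status == noErr, let session = session {
            // Example property: hint that decoding happens in real time.
            VTSessionSetProperty(session, key: kVTDecompressionPropertyKey_RealTime, value: kCFBooleanTrue)
        }
        return status
    }

    /// Decode one compressed sample buffer asynchronously.
    func decode(_ sampleBuffer: CMSampleBuffer) -> OSStatus {
        guard let session = session else { return kVTInvalidSessionErr }
        var infoFlags = VTDecodeInfoFlags()
        return VTDecompressionSessionDecodeFrame(
            session,
            sampleBuffer: sampleBuffer,
            flags: [._EnableAsynchronousDecompression],
            frameRefcon: nil,
            infoFlagsOut: &infoFlags)
    }

    /// Block until every pending frame has been delivered to the callback.
    func finish() {
        guard let session = session else { return }
        VTDecompressionSessionWaitForAsynchronousFrames(session)
    }

    /// Tear down the session and release its resources.
    func invalidate() {
        if let session = session { VTDecompressionSessionInvalidate(session) }
        session = nil
    }
}
```

Because the refCon is passed unretained, the decoder object has to stay alive until `invalidate()` has been called.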

VTDecompressionSession


videoFormatDescription


A reference to a CMFormatDescription, a CF object describing media of a particular type (audio, video, muxed, etc).


destinationImageBufferAttributes

let destinationImageBufferAttributes: CFDictionary = [kCVPixelBufferOpenGLESCompatibilityKey: kCFBooleanTrue] as CFDictionary

When configuring the output, do not ask for YUV to be converted to BGRA; otherwise two copies of the buffer end up in memory, which hurts performance.

outputCallback

The decode callback delivers the CVPixelBuffer, the timestamps, the decode status code, and info flags such as whether the frame was dropped.

var record = VTDecompressionOutputCallbackRecord(decompressionOutputCallback: { (decompressionOutputRefCon, sourceFrameRefCon, status, infoFlags, imageBuffer, presentationTimeStamp, presentationDuration) in

VTDecompressionSessionDecodeFrame

open func decode(frame: CMSampleBuffer) {

Asynchronously Decompress

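- With asynchronous decompression, the decoder's internal pipeline can fill up. When that happens, the current decode call blocks until there is room to continue decoding.

- VTDecompressionSessionWaitForAsynchronousFrames returns only after all pending frames have been decoded.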

VTDecompressionSessionCanAcceptFormatDescription

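When an I frame arrives during decoding, the SPS and PPS may have changed. VTDecompressionSessionCanAcceptFormatDescription tells you whether the existing session can keep decoding with the new format description; if it cannot, you have to create a new session.

Use VTDecompressionSessionInvalidate to destroy the old session.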

Decompress Summary

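- When an I frame arrives during decoding, check whether the CMVideoFormatDescription built from the new SPS and PPS can be decoded without creating a new session; if not, recreate the session. This keeps a corrupted stretch of data from affecting what follows during transmission.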

Compress

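Encoding flow:

- Create an encoding session with VTCompressionSessionCreate(_:_:_:_:_:_:_:_:_:_:).
- Set session properties with VTSessionSetProperty(_:_:_:) or VTSessionSetProperties(_:_:).
- Encode video frames with VTCompressionSessionEncodeFrame(_:_:_:_:_:_:_:); the encoded result is delivered to the VTCompressionOutputCallback.
- Call VTCompressionSessionCompleteFrames(_:_:) to force any pending frames to complete.
- When encoding is finished, call VTCompressionSessionInvalidate(_:) to end the session and release its memory.

Put simply, encoding turns a series of unrelated frames into frames that depend on one another. Each CMSampleBuffer coming from the camera is raw image data with no relation to its neighbors. Encoding finds what consecutive frames have in common, compresses it, and discards redundant pixel data, so that the consecutive frames end up linked together.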
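The encoding flow can be sketched in the same way. This is a minimal illustration, not a tuned configuration: the class `H264Encoder`, its method names, and the chosen property values (a two-second key frame interval, for instance) are assumptions, and it uses the block-based variant of VTCompressionSessionEncodeFrame so that no C callback or refCon is needed.

```swift
import Foundation
import VideoToolbox

/// Hypothetical wrapper around a VTCompressionSession.
final class H264Encoder {
    private var session: VTCompressionSession?

    /// Create the session and set a few common properties.
    func createSession(width: Int32, height: Int32,
                       bitsPerSecond: Int, framesPerSecond: Int) -> OSStatus {
        let status = VTCompressionSessionCreate(
            allocator: kCFAllocatorDefault,
            width: width,
            height: height,
            codecType: kCMVideoCodecType_H264,
            encoderSpecification: nil,
            imageBufferAttributes: nil,
            compressedDataAllocator: nil,
            outputCallback: nil,  // nil because frames are encoded with an output handler
            refcon: nil,
            compressionSessionOut: &session)
        guard status == noErr, let session = session else { return status }

        VTSessionSetProperty(session, key: kVTCompressionPropertyKey_RealTime,
                             value: kCFBooleanTrue)
        VTSessionSetProperty(session, key: kVTCompressionPropertyKey_AverageBitRate,
                             value: NSNumber(value: bitsPerSecond))
        // Assumed policy: one key frame every two seconds, expressed in frames.
        VTSessionSetProperty(session, key: kVTCompressionPropertyKey_MaxKeyFrameInterval,
                             value: NSNumber(value: framesPerSecond * 2))
        return VTCompressionSessionPrepareToEncodeFrames(session)
    }

    /// Encode one uncompressed frame; `onOutput` receives the compressed CMSampleBuffer.
    func encode(_ pixelBuffer: CVPixelBuffer, at presentationTime: CMTime,
                onOutput: @escaping (CMSampleBuffer) -> Void) -> OSStatus {
        guard let session = session else { return kVTInvalidSessionErr }
        return VTCompressionSessionEncodeFrame(
            session,
            imageBuffer: pixelBuffer,
            presentationTimeStamp: presentationTime,
            duration: .invalid,
            frameProperties: nil,
            infoFlagsOut: nil) { status, _, sampleBuffer in
                guard status == noErr, let sampleBuffer = sampleBuffer else { return }
                onOutput(sampleBuffer)
        }
    }

    /// Flush pending frames, then tear the session down.
    func finish() {
        guard let session = session else { return }
        VTCompressionSessionCompleteFrames(session, untilPresentationTimeStamp: .invalid)
        VTCompressionSessionInvalidate(session)
        self.session = nil
    }
}
```

A caller would create the session once, call `encode` for each captured CVPixelBuffer, and call `finish` when capture stops.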

VTCompressionSession

Create the encoding session and specify H.264 (kCMVideoCodecType_H264) as the codec type.

VTSessionSetProperty

Sets a property on a VideoToolbox session.


WebRTC

WebRTC's configuration code is a useful reference here; the relevant methods are - (void)configureCompressionSession and - (void)setEncoderBitrateBps:(uint32_t)bitrateBps.

For details, see WebRTC-RTCVideoEncoderH264.

VTCompressionSessionPrepareToEncodeFrames

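The purpose of this call is to tell the encoder to allocate the memory it needs up front. It is optional: if you skip it, the encoder allocates on the first call to VTCompressionSessionEncodeFrame.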

VTCompressionSessionEncodeFrame


Compress Summary

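Many people are unsure how to set parameters such as the bit rate and the maximum key frame interval; the settings below can serve as a reference.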

Advanced

Decompress && Compress

Decompress H.264 Elementary Stream Flow

This section shows how to decode an H.264 bitstream.

Stream Data => CMSampleBuffer

How to wrap the data stream into a CMSampleBuffer (CMBlockBuffer).

Elementary Stream => MPEG-4

- Use CMVideoFormatDescriptionCreateFromH264ParameterSets to wrap the SPS and PPS into a CMVideoFormatDescription.

CMVideoFormatDescription

A synonym type used for manipulating video CMFormatDescriptions.

typealias CMVideoFormatDescription = CMFormatDescription
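Building the format description from an SPS and a PPS might look like the sketch below. The function name `makeH264FormatDescription` is hypothetical; the parameter sets are assumed to be raw NAL unit bytes with the start code already removed, and a 4-byte length prefix is assumed for the NAL units that follow.

```swift
import CoreMedia

/// Wrap one SPS and one PPS into a CMVideoFormatDescription.
/// Returns nil if either parameter set is empty or Core Media rejects them.
func makeH264FormatDescription(sps: [UInt8], pps: [UInt8]) -> CMVideoFormatDescription? {
    guard !sps.isEmpty, !pps.isEmpty else { return nil }
    var formatDescription: CMVideoFormatDescription?
    let status = sps.withUnsafeBufferPointer { spsBuffer -> OSStatus in
        pps.withUnsafeBufferPointer { ppsBuffer -> OSStatus in
            // Non-empty arrays always have a base address, so the unwraps are safe here.
            let pointers = [spsBuffer.baseAddress!, ppsBuffer.baseAddress!]
            let sizes = [spsBuffer.count, ppsBuffer.count]
            return CMVideoFormatDescriptionCreateFromH264ParameterSets(
                allocator: kCFAllocatorDefault,
                parameterSetCount: pointers.count,
                parameterSetPointers: pointers,
                parameterSetSizes: sizes,
                nalUnitHeaderLength: 4,  // AVCC length prefix size in bytes
                formatDescriptionOut: &formatDescription)
        }
    }
    return status == noErr ? formatDescription : nil
}
```

The pointers are only valid inside the closures, which is why the Core Media call is made there rather than after they return.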

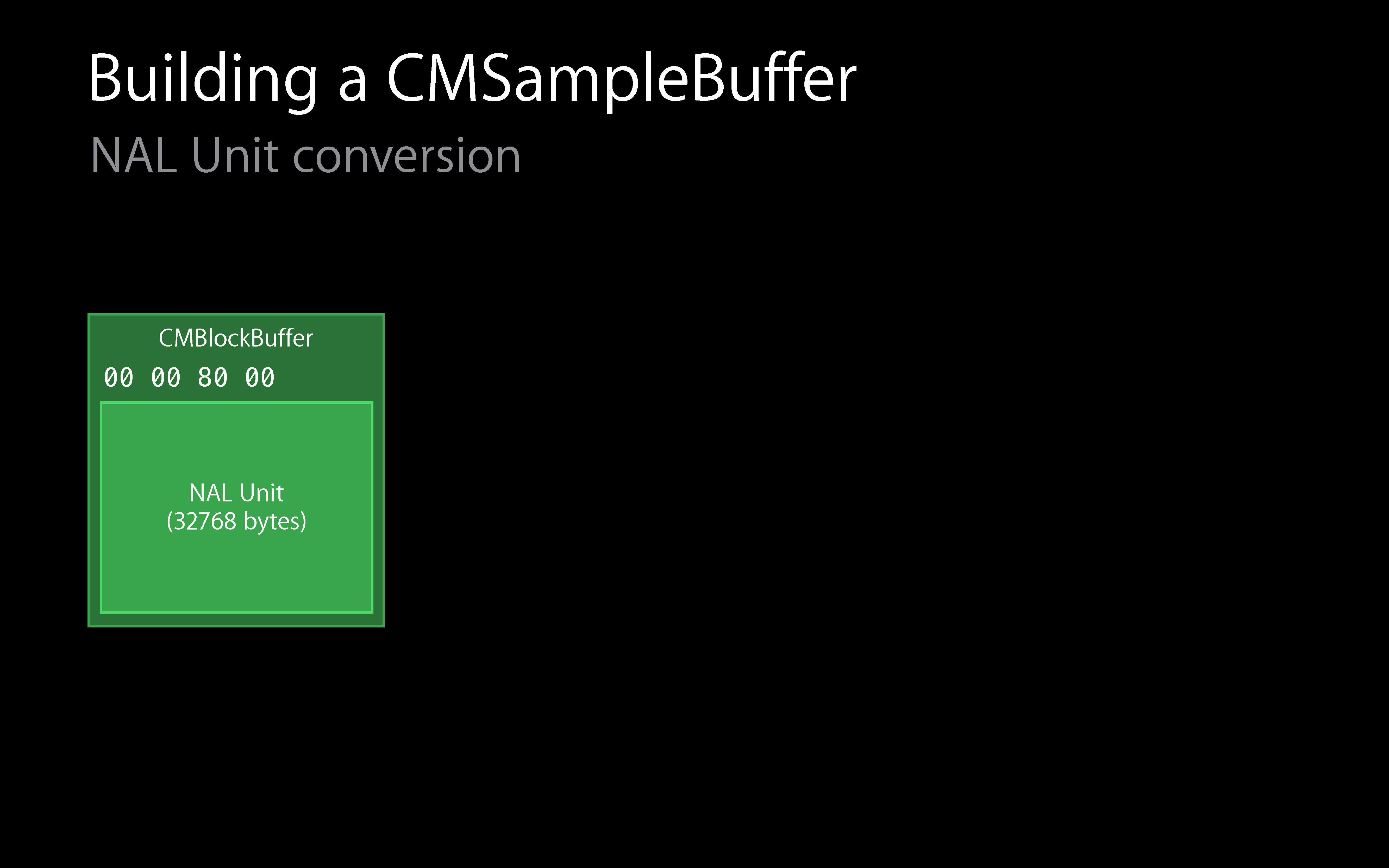

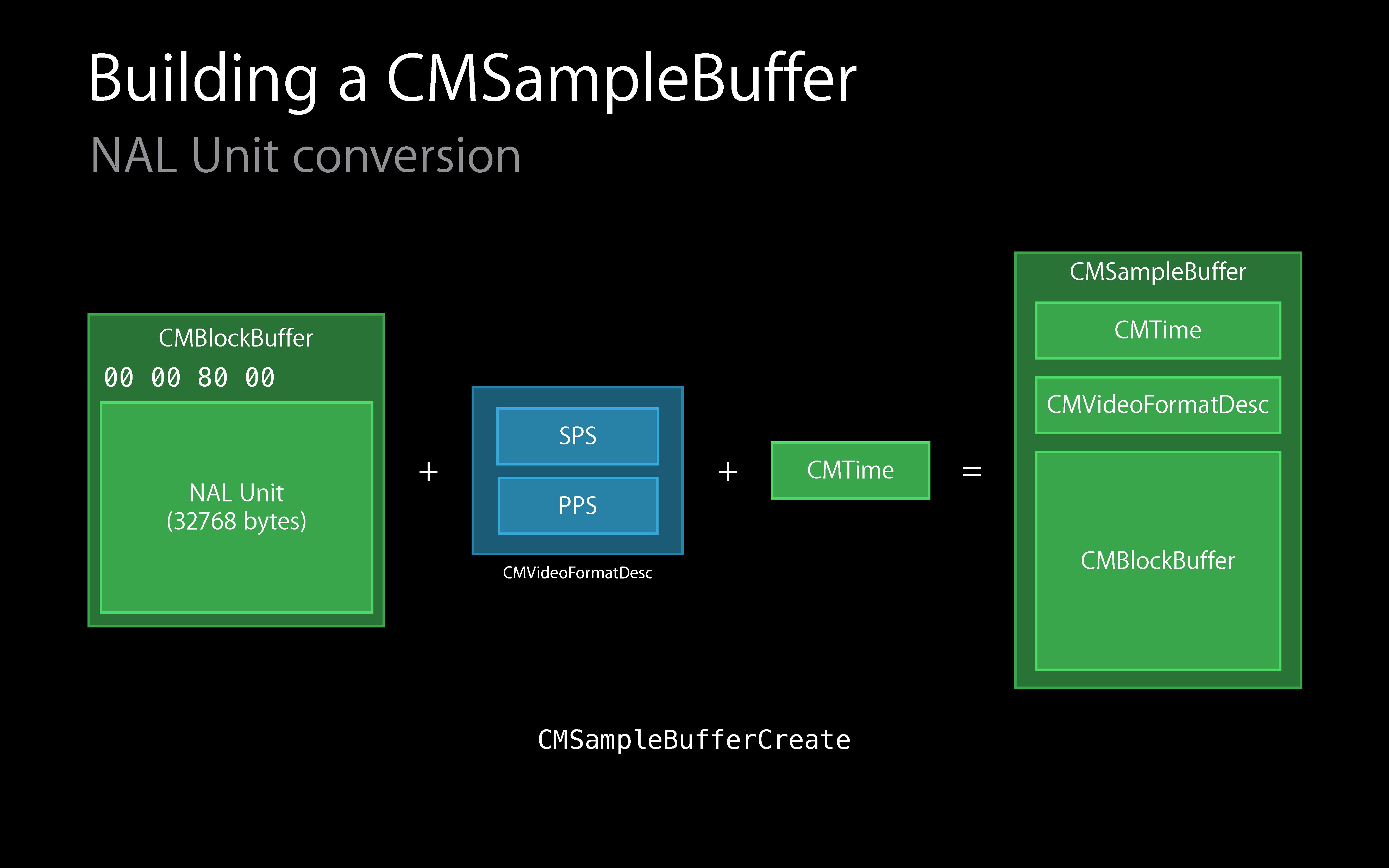

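- Rewrite the NALU header.

NALUs come in two main formats, Annex B and AVCC, also known as the Elementary Stream and MPEG-4 formats. An Annex B NALU begins with the start code 0x000001 or 0x00000001; an AVCC NALU begins with the length of the NALU.

First replace the start code in the header with the NALU length, then wrap the NAL units in a CMBlockBuffer. A frame may consist of many NAL units, and all of them must be included. The block buffer is created with CMBlockBufferCreateWithMemoryBlock.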
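The start-code replacement itself needs no Apple framework. The sketch below splits an Annex B byte stream into NAL units and re-emits them with 4-byte big-endian length prefixes. The function names are hypothetical, and the splitter is deliberately simple: it treats a single zero byte before 0x000001 as part of a 4-byte start code and does not strip any further trailing zero padding.

```swift
/// Split an Annex B byte stream into NAL unit payloads (start codes removed).
func splitAnnexB(_ stream: [UInt8]) -> [[UInt8]] {
    // Each entry records where a start code begins and where its payload begins.
    var starts: [(codeStart: Int, payloadStart: Int)] = []
    var i = 0
    while i + 3 <= stream.count {
        if stream[i] == 0 && stream[i + 1] == 0 && stream[i + 2] == 1 {
            // A 4-byte start code is a 3-byte one preceded by an extra zero byte.
            let codeStart = (i > 0 && stream[i - 1] == 0) ? i - 1 : i
            starts.append((codeStart: codeStart, payloadStart: i + 3))
            i += 3
        } else {
            i += 1
        }
    }
    var units: [[UInt8]] = []
    for (n, start) in starts.enumerated() {
        let end = n + 1 < starts.count ? starts[n + 1].codeStart : stream.count
        if end > start.payloadStart {
            units.append(Array(stream[start.payloadStart..<end]))
        }
    }
    return units
}

/// Concatenate NAL units in AVCC form: 4-byte big-endian length, then the payload.
func avccBytes(fromNALUnits units: [[UInt8]]) -> [UInt8] {
    var out: [UInt8] = []
    for unit in units {
        let length = UInt32(unit.count)
        out += [UInt8(truncatingIfNeeded: length >> 24),
                UInt8(truncatingIfNeeded: length >> 16),
                UInt8(truncatingIfNeeded: length >> 8),
                UInt8(truncatingIfNeeded: length)]
        out += unit
    }
    return out
}

// Two units: [0x67, 0x42] after a 4-byte start code, [0x68, 0xCE] after a 3-byte one.
let annexBSample: [UInt8] = [0, 0, 0, 1, 0x67, 0x42, 0, 0, 1, 0x68, 0xCE]
let avccSample = avccBytes(fromNALUnits: splitAnnexB(annexBSample))
```

In a real pipeline the SPS and PPS units would go into the format description, and only the picture data units would be length-prefixed and copied into the CMBlockBuffer.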

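Finally, the CMBlockBuffer, the CMVideoFormatDescription, and the CMTime are combined into a CMSampleBuffer with CMSampleBufferCreate. You can also use CMSampleBufferCreateReady to create a CMSampleBuffer that is already marked ready.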

Compress

// The following keys may be attached to individual samples via the CMSampleBufferGetSampleAttachmentsArray() interface:
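Going the other way, the data inside an encoded CMSampleBuffer is length-prefixed (AVCC), so producing an elementary stream means swapping each length prefix for a start code. The sketch below shows only that byte-level step; the function name is hypothetical, and it assumes the bytes have already been copied out of the CMBlockBuffer. Key frames can be identified through the sample attachments mentioned above (for example kCMSampleAttachmentKey_NotSync), and the SPS and PPS have to be read from the format description and sent ahead of them.

```swift
/// Convert AVCC data (length-prefixed NAL units) to Annex B (start-code-prefixed).
/// `lengthSize` is the size of the length prefix in bytes, 4 by default.
func annexBBytes(fromAVCC avcc: [UInt8], lengthSize: Int = 4) -> [UInt8] {
    let startCode: [UInt8] = [0, 0, 0, 1]
    var out: [UInt8] = []
    var offset = 0
    while offset + lengthSize <= avcc.count {
        // Read the big-endian NAL unit length.
        var length = 0
        for byte in avcc[offset..<offset + lengthSize] {
            length = (length << 8) | Int(byte)
        }
        offset += lengthSize
        // Stop on an empty or truncated unit rather than reading past the end.
        guard length > 0, offset + length <= avcc.count else { break }
        out += startCode
        out += avcc[offset..<offset + length]
        offset += length
    }
    return out
}

let avccInput: [UInt8] = [0, 0, 0, 2, 0x65, 0x88, 0, 0, 0, 1, 0x41]
let annexBOutput = annexBBytes(fromAVCC: avccInput)
```

Stopping at the first malformed length keeps a damaged buffer from producing garbage NAL units further down the stream.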

Summary

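- Personally, I think this part is better written in Objective-C; having Swift talk to the C functions directly is quite painful. One option is to wrap the C layer in Objective-C first and then write the rest in Swift.

- Using VideoToolbox itself is not difficult. What is hard is understanding the concepts, knowing how to set the parameters and why, and handling the data after encoding or decoding.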